Tongyi Qianwen, the company’s large language model (LLM) series, also known as Qwen, is used in industries ranging from consumer electronics to cars and online games, according to Zhou Jingren, technology chief at Alibaba Cloud, the intelligent-computing arm of Post owner Alibaba.

Qwen “is truly embraced by our enterprise customers and we have seen so many creative applications of the models from across the industries”, Zhou said at a company event held in Beijing on Thursday.

More than 2.2 million corporate users also have access to Qwen-powered AI services through DingTalk, Alibaba’s office collaboration platform, the company said.

LLMs are the technology that underpins a new generation of AI tools, such as OpenAI’s ChatGPT and Sora. Since the Microsoft-backed start-up unveiled ChatGPT in late 2022, Big Tech companies and smaller ventures in China have been scrambling to catch up by developing and promoting similar services.

Alibaba launched Qwen a year ago, weeks after rival Baidu introduced its Ernie Bot, which had 85,000 corporate clients as of early April this year, according to Baidu founder and CEO Robin Li Yanhong.

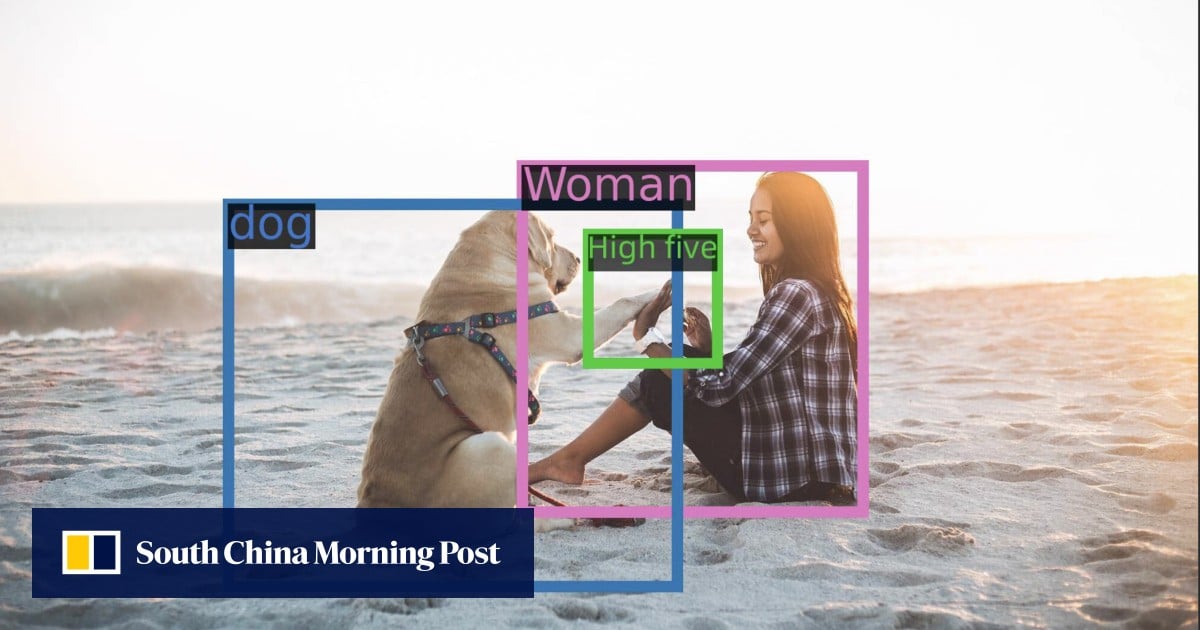

Alibaba’s Zhou said the company’s efforts to enhance its LLMs’ capabilities have enabled clients to come up with innovative applications of AI.

Qwen also supports new features in Xiaomi’s latest smartphone models, including the ability to analyse images of food and generate corresponding recipes, Alibaba said.

Other major Qwen clients include Beijing-based video game developer Perfect World Games.

On Thursday, Alibaba also unveiled an updated version of its LLM, Tongyi Qianwen 2.5, which the company said outperforms GPT-4, OpenAI’s most advanced publicly available model, in several Chinese-language capabilities.

The Hangzhou-based company also launched several open-source models of various sizes, including Qwen1.5-110B, which has 110 billion parameters, making it one of the largest Chinese open-source models.

A larger number of parameters generally translates into a more complex AI model. Most mainstream Chinese open-source models have between 7 billion and 14 billion parameters.